Portfolio


Visual Reasoning with Reinforcement Learning

We explore how reinforcement learning can improve visual question answering by training models to reason more accurately and ground their answers visually. Our method boosts performance, especially in out-of-domain scenarios, and reveals trade-offs between accuracy and reasoning alignment.

Decoding Brain Activity with Pretrained Classifiers

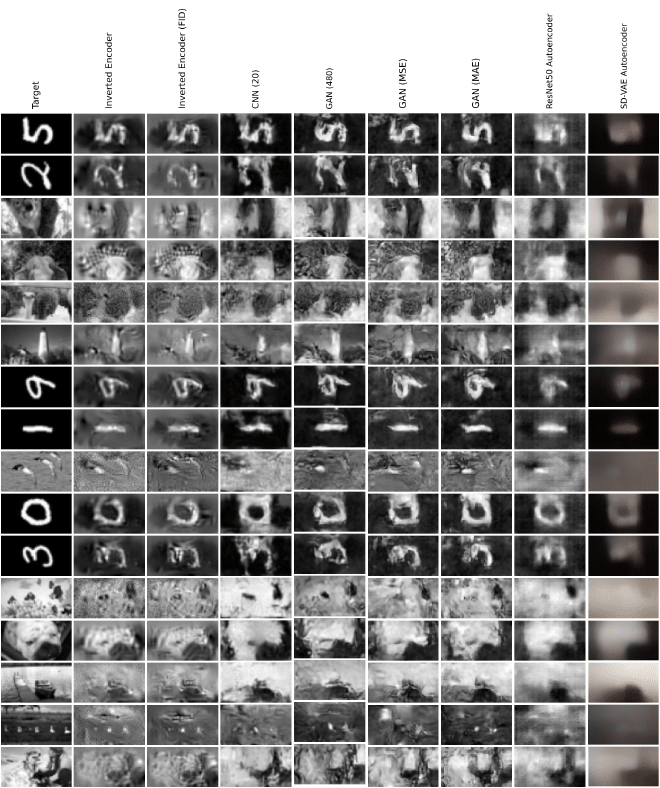

In this project, we explored how to reconstruct visual stimuli from brain recordings in the mouse visual cortex. My focus was on leveraging pretrained image classifiers—specifically ResNet50—to decode these neuronal signals in a data-efficient way.

I designed a two-part system:

- First, I mapped brain activity into the latent space of a pretrained ResNet50 layer whose representations are known to align well with primary visual cortex (V1).

- Then, I trained a custom decoder using transposed convolutions to reconstruct full-resolution images from those features.
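The write-up does not say how the first stage was fit, so the sketch below assumes a common choice: a ridge regression from recorded neuron responses to ResNet50 feature values (shown for a single feature value; the real target layer has many channels). The second stage is illustrated by one transposed-convolution operation, the upsampling building block that a trained decoder stacks with learned kernels and nonlinearities. This is a dependency-free illustration under those assumptions, with hypothetical function names (`solve`, `fit_ridge`, `conv_transpose2d`), not the project's code.

```python
from typing import List

Matrix = List[List[float]]


def solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_ridge(responses: Matrix, feature: List[float], lam: float) -> List[float]:
    """Stage 1 (assumed linear): w = (R^T R + lam*I)^-1 R^T f, mapping one
    response vector per image (rows of R) to one latent feature value."""
    d = len(responses[0])
    gram = [[sum(row[i] * row[j] for row in responses) + (lam if i == j else 0.0)
             for j in range(d)] for i in range(d)]
    rhs = [sum(row[i] * y for row, y in zip(responses, feature)) for i in range(d)]
    return solve(gram, rhs)


def conv_transpose2d(x: Matrix, kernel: Matrix, stride: int = 2) -> Matrix:
    """Stage 2 building block: single-channel transposed convolution, no padding.

    Every input pixel adds a scaled copy of the k x k kernel to the output, so an
    H x W map grows to ((H-1)*stride + k) x ((W-1)*stride + k).
    """
    h, w, k = len(x), len(x[0]), len(kernel)
    out = [[0.0] * ((w - 1) * stride + k) for _ in range((h - 1) * stride + k)]
    for i in range(h):
        for j in range(w):
            for u in range(k):
                for v in range(k):
                    out[i * stride + u][j * stride + v] += x[i][j] * kernel[u][v]
    return out


# Synthetic check: the "feature" is exactly 2*n0 - n1 of two recorded neurons.
R = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
f = [2.0, -1.0, 1.0, 3.0]
w = fit_ridge(R, f, lam=1e-6)  # approximately [2.0, -1.0]

# A 2x2 feature map upsampled to 4x4 with a 2x2 all-ones kernel and stride 2.
up = conv_transpose2d([[1.0, 2.0], [3.0, 4.0]], [[1.0, 1.0], [1.0, 1.0]])
# up == [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
```

Because the pretrained encoder stays frozen, only the small regression and the decoder weights would need fitting, which matches the low-data setting described here.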

As the figure above shows, other approaches outperformed my method (ResNet50 Autoencoder), but it still reconstructed images with reasonable fidelity. Unlike the other methods, it worked with images at their original resolution, highlighting the benefit of decoding through biologically aligned intermediate representations. It also required no retraining of the pretrained components, making it practical for low-data neuroscience settings.

The project taught me a lot about representation learning, training decoders in frozen-pipeline setups, and the limitations of transfer learning in domain-shifted settings like brain data.

Read the full report for more details.

Transformers and Multi-Modality

In the course Visual Intelligence: Machines and Minds, I implemented the transformer architecture in PyTorch and trained scaled-down versions of several vision and language models, such as GPT, MaskGIT, and 4M. This work deepened my understanding of attention mechanisms, modality-specific tokenization, and training dynamics in generative and multi-modal settings.
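Attention is the core operation of the transformers mentioned above. As a minimal, dependency-free sketch (not the course's PyTorch implementation; the function names are hypothetical), single-head scaled dot-product attention computes softmax(QK^T / sqrt(d_k)) V, and an optional causal mask restricts each position to itself and earlier positions, as in GPT-style language models.

```python
import math
from typing import List, Optional

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]  # exp(-inf) == 0.0 for masked scores
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix,
              mask: Optional[List[List[bool]]] = None) -> Matrix:
    """Single-head scaled dot-product attention.

    q: n_q x d_k, k: n_k x d_k, v: n_k x d_v.
    mask[i][j] == False blocks query i from attending to key j.
    """
    d_k = len(q[0])
    out = []
    for i, qi in enumerate(q):
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        if mask is not None:
            scores = [s if mask[i][j] else -math.inf for j, s in enumerate(scores)]
        weights = softmax(scores)
        # Output row i is the attention-weighted average of the value rows.
        out.append([sum(wt * vj[c] for wt, vj in zip(weights, v))
                    for c in range(len(v[0]))])
    return out


def causal_mask(n: int) -> List[List[bool]]:
    """GPT-style mask: position i may attend only to positions j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]
```

With a causal mask, the first position can only attend to itself, so its output equals the first value row; with identical keys and no mask, the weights are uniform and the output is the mean of the values.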

Below is an example output from Nano4M: Given a single RGB image (left), the model predicts both the depth map (center) and surface normals (right), demonstrating its capacity for rich 3D scene understanding from a single visual input.

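[Figure: Nano4M example. Left: input RGB image; center: predicted depth map; right: predicted surface normals.]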

Any Question Any Place

LauzHack 2024 – AXA Award for Best Use of Small Models

This AI-powered platform was built during a 24-hour hackathon and combines large language models (LLMs) with computer vision (CV) to let users ask natural language questions about satellite images—such as “How many ships are there larger than 20 meters?” or “What area is covered by forest?” The system interprets the question, selects appropriate CV tools, and returns a human-readable answer.

Our team won the AXA Award for Best Use of Small Models, recognizing our efficient design that used lightweight CV models to deliver fast and accurate analysis.

Key Features:

- Natural language interface for satellite image analysis

- Object counting and filtering by attributes (e.g., size, position)

- Land use segmentation (e.g., forest, water, urban)

- Modular backend makes it easy to add new models and tools

Check out the GitHub repository.
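The question, tool, answer flow and the modular backend can be illustrated with a small tool registry. This is a hypothetical sketch rather than the repository's code: keyword matching stands in for the LLM that interprets the question, and the `Detection` type, the `tool` decorator, and the hard-coded detections are illustrative assumptions in place of real CV model outputs.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Detection:
    label: str       # e.g. "ship" (hypothetical detector output)
    length_m: float  # estimated object length in metres


# Registry of tools: adding a capability means registering one more function.
TOOLS: Dict[str, Callable[[List[Detection], str], str]] = {}


def tool(keyword: str):
    """Register a tool under the keyword that routes questions to it."""
    def register(fn: Callable[[List[Detection], str], str]):
        TOOLS[keyword] = fn
        return fn
    return register


@tool("how many ships")
def count_ships(dets: List[Detection], question: str) -> str:
    # Parse an optional size filter such as "larger than 20 meters".
    match = re.search(r"than\s+(\d+(?:\.\d+)?)", question)
    threshold = float(match.group(1)) if match else 0.0
    n = sum(1 for d in dets if d.label == "ship" and d.length_m > threshold)
    suffix = f" longer than {threshold:g} m" if match else ""
    return f"There are {n} ships{suffix}."


def answer(question: str, dets: List[Detection]) -> str:
    """Stand-in for the LLM step: dispatch to the first tool whose keyword matches."""
    q = question.lower()
    for keyword, fn in TOOLS.items():
        if keyword in q:
            return fn(dets, q)
    return "Sorry, no tool can answer that question yet."


dets = [Detection("ship", 35.0), Detection("ship", 12.0),
        Detection("ship", 25.0), Detection("car", 4.0)]
reply = answer("How many ships are there larger than 20 meters?", dets)
# reply == "There are 2 ships longer than 20 m."
```

A land-use tool, for example, would be one more decorated function; the `answer` dispatcher itself does not change.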

Designing a Programming Language and its Compiler

We designed and implemented Um, a compiled programming language focused on ease of use. The compiler handles parsing, scope and type checking, and code generation; the language supports ergonomic concurrent execution; and the project includes a comprehensive test suite. The compiler was built in Haskell using Parsec and Sprockell.

For example, consider the following Python program, in which four threads each add their thread ID to a shared counter while holding a lock:

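```python
import threading

total = 0
lock = threading.Lock()  # guards updates to the shared counter


def thread_function(thread_id):
    global total
    with lock:  # only one thread updates total at a time
        total += thread_id


threads = []
for i in range(4):
    t = threading.Thread(target=thread_function, args=(i,))
    threads.append(t)
    t.start()

for t in threads:
    t.join()  # wait for every thread to finish

print(total)  # 0 + 1 + 2 + 3 = 6
```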

This program can be expressed in Um as:

```
g l lock
g i total
// 4
    sync lock
        total total+/
    .
.
p total
```

You can learn more about Um syntax and features or explore the compiler code.
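Scope and type checking are commonly implemented with a stack of symbol tables: entering a block pushes a scope, leaving it pops one, and each name is resolved innermost-first. The Um compiler is written in Haskell and its actual design may differ; the Python sketch below, with its hypothetical `ScopeChecker` class, `CheckError` exception, and string type names, only illustrates the general technique.

```python
from typing import Dict, List


class CheckError(Exception):
    """Raised for scope or type errors found during checking."""


class ScopeChecker:
    """Stack of symbol tables mapping variable names to declared types."""

    def __init__(self) -> None:
        self.scopes: List[Dict[str, str]] = [{}]  # start with the global scope

    def enter(self) -> None:
        self.scopes.append({})

    def exit(self) -> None:
        if len(self.scopes) == 1:
            raise CheckError("cannot leave the global scope")
        self.scopes.pop()

    def declare(self, name: str, typ: str) -> None:
        current = self.scopes[-1]
        if name in current:
            raise CheckError(f"'{name}' is already declared in this scope")
        current[name] = typ

    def lookup(self, name: str) -> str:
        # Innermost scope first, so inner declarations shadow outer ones.
        for scope in reversed(self.scopes):
            if name in scope:
                return scope[name]
        raise CheckError(f"'{name}' is used before declaration")

    def check_assign(self, name: str, value_type: str) -> None:
        declared = self.lookup(name)
        if declared != value_type:
            raise CheckError(f"cannot assign {value_type} to '{name}' of type {declared}")


checker = ScopeChecker()
checker.declare("total", "int")   # global declaration
checker.enter()                   # entering a nested block
checker.declare("total", "bool")  # shadows the outer 'total'
inner = checker.lookup("total")   # "bool"
checker.exit()
outer = checker.lookup("total")   # "int"
```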

Ergonomic Wireless Keyboard

I designed and built a custom wireless ergonomic keyboard called Prototype. It is powered by a nice!nano controller running ZMK firmware, which allows extensive customization of key mappings and macros. The keyboard is designed for comfort during long typing sessions, with a split layout that reduces strain on the wrists.

ParityGames.io

ParityGames.io is an interactive web app for visualizing, editing, and solving parity games, built for researchers and educators. Features include intuitive diagram editing, automatic layout, undo/redo, and step-by-step visualization of solver traces (e.g., for Zielonka's algorithm). It supports both native algorithm integration in TypeScript and importing traces from external tools.

Try it online or take a look at the code.
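Zielonka's recursive algorithm, one of the solvers whose traces the app visualizes, fits in a few dozen lines. The app's native solvers are written in TypeScript; this Python sketch is not ParityGames.io's code. It assumes the max-parity convention (player 0 wins a play if the highest priority seen infinitely often is even, player 1 if it is odd), players numbered 0 and 1, and a game graph in which every vertex has at least one successor.

```python
from typing import Dict, List, Set, Tuple


def attractor(vertices: Set[int], owner: Dict[int, int], succ: Dict[int, List[int]],
              target: Set[int], player: int) -> Set[int]:
    """Vertices (within `vertices`) from which `player` can force a visit to `target`."""
    attr = set(target)
    changed = True
    while changed:
        changed = False
        for v in vertices - attr:
            moves = [u for u in succ[v] if u in vertices]
            if owner[v] == player:
                forced = any(u in attr for u in moves)  # player picks a move into attr
            else:
                forced = all(u in attr for u in moves)  # opponent cannot avoid attr
            if forced:
                attr.add(v)
                changed = True
    return attr


def zielonka(vertices: Set[int], owner: Dict[int, int], priority: Dict[int, int],
             succ: Dict[int, List[int]]) -> Tuple[Set[int], Set[int]]:
    """Return (W0, W1), the winning regions of players 0 and 1 (max-parity)."""
    if not vertices:
        return set(), set()
    d = max(priority[v] for v in vertices)
    p = d % 2  # the player who benefits from the top priority
    top = {v for v in vertices if priority[v] == d}
    a = attractor(vertices, owner, succ, top, p)
    sub = zielonka(vertices - a, owner, priority, succ)
    win: List[Set[int]] = [set(), set()]
    if not sub[1 - p]:
        # The opponent wins nowhere in the subgame, so p wins everywhere.
        win[p] = set(vertices)
        return win[0], win[1]
    # Remove what the opponent can force, then solve the rest again.
    b = attractor(vertices, owner, succ, sub[1 - p], 1 - p)
    sub2 = zielonka(vertices - b, owner, priority, succ)
    win[p] = sub2[p]
    win[1 - p] = sub2[1 - p] | b
    return win[0], win[1]


# Example: vertices 0 and 1 are self-loops with priorities 2 and 1; vertices 2
# and 3 (priority 0) can move to either, owned by players 1 and 0 respectively.
owner = {0: 0, 1: 0, 2: 1, 3: 0}
priority = {0: 2, 1: 1, 2: 0, 3: 0}
succ = {0: [0], 1: [1], 2: [0, 1], 3: [0, 1]}
w0, w1 = zielonka({0, 1, 2, 3}, owner, priority, succ)  # ({0, 3}, {1, 2})
```

Each recursive call and attractor computation corresponds to one step a trace visualizer could display.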